Automating Rental Market Alerts with AWS and RentCast - A Serverless Project for Investors and Engineers

Author: Christopher Clemmons

I created a rental market trends newsletter using AWS Lambda, SES, and Terraform. In this article, I'll explain why a tool like this is a game changer in the real estate investing business, and how you, as a cloud solutions expert, can help a business with a similar need.

Why is a rental market trends newsletter needed?

Real estate investors spend countless hours refreshing Zillow, Redfin, or digging through the MLS just to keep tabs on rent comps and market stats. There's no passive, hands-off way to stay updated on a target ZIP code unless you shell out for a premium tool.

Let's be honest...most of those tools are overpriced and overbuilt.

You don't need a Swiss Army knife when all you really want is a scalpel.

Imagine this…

You're a multifamily investor evaluating Greenville, SC (ZIP 29611) for your next BRRRR deal.

On Monday, rent comps look solid, with DOM (days on market) hovering around 40. Everything pencils out.

By Friday, you check again. The average list prices have jumped 8%, and new comps hit the market. That deal you were underwriting just fell out of your buy box. You missed the window.

Sure, the 8% increase is great… but only if you were holding the asset — not if you were still "analyzing".

Here's why missing that window matters:

Missed Equity Play: If you were looking at that $2M property Monday, and market data now supports $2.2M, the seller may have updated their price — or worse, another investor grabbed it before you refreshed your comps.

Loan-to-Value Just Shifted: If appraisals are going up and you're still basing your offer on old numbers, your conservative underwriting now makes you uncompetitive.

Missed Refinance Window: If you're doing BRRRR and comp values increase, you want to refinance while those comps are fresh. Waiting too long may mean you miss the appraisal high point.

Here are some other benefits of catching these kinds of trends early:

Negotiate Harder on Properties You're Already Watching: Knowing the neighborhood's trending up helps you justify a higher ARV, which makes your offer more appealing.

Justify Higher Rents to Lenders or Partners: If you can show the market's moving, it supports pro forma numbers that LPs, lenders, or underwriters might otherwise question.

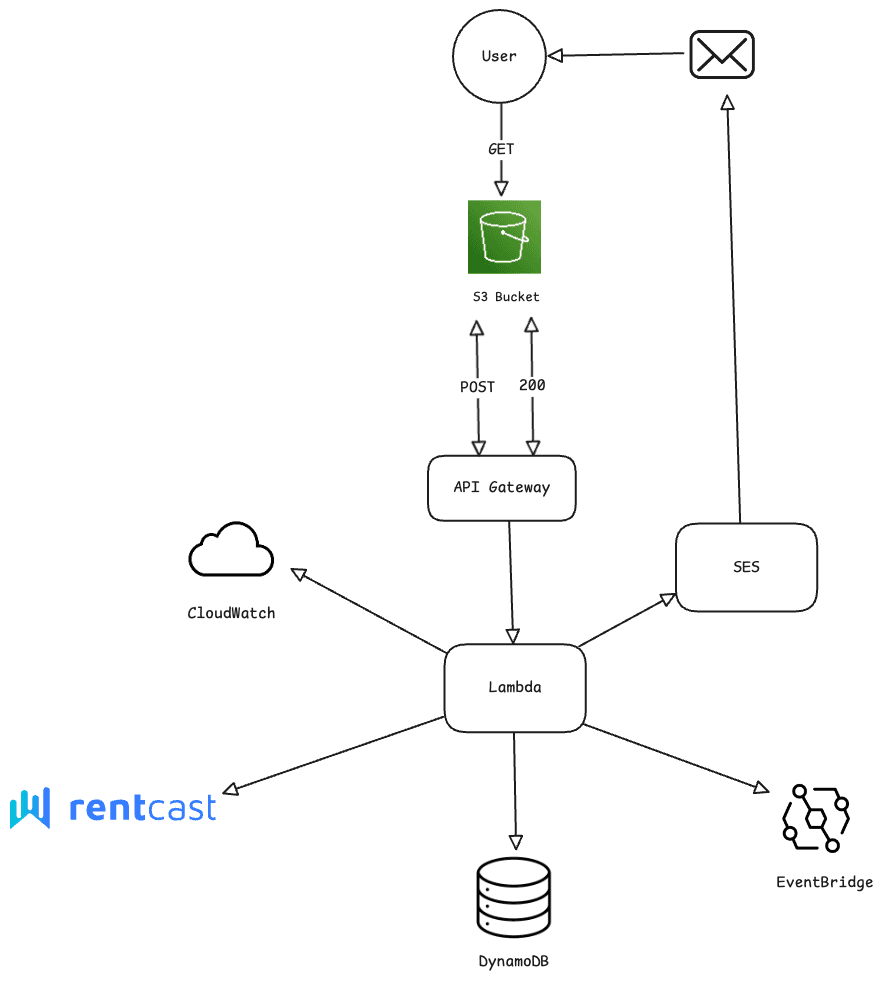

Architecture overview

When designing this, I had a few goals in mind:

- Make it reliable

- Make it scalable

- Make it cheap

After some thought, I decided to go with the solutions below. I'll also explain why I went with this design.

Why S3 over EC2?

Great question! An EC2 instance would still have let me host the frontend of the application, but it would require more steps. If I hosted this app on EC2, I would need to do the following:

- Build the server

- Install NGINX

- Update security groups

- Keep it on 24/7 just in case a request comes in

- Configure autoscaling manually

This would not only have cost more, it would also have added more complexity than the app needed.

All I need is a form dude!

S3 is not only cheap as hell, it autoscales and serves HTML files just fine.

The same goes for the Lambda functions. One Lambda runs on a schedule using EventBridge, and the other only runs when someone signs up, which is not that frequent. I'm only billed for the time they run, and each run takes just a couple of seconds, so again, very cheap stuff.
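To put a rough number on "very cheap", here is a back-of-the-envelope estimate. The two rates are assumptions based on published on-demand x86 Lambda pricing and may have changed, and the workload (one run per day, about 3 seconds, 128 MB) is hypothetical rather than measured from this project.

```javascript
// Rough monthly Lambda cost estimate.
// ASSUMPTION: both rates come from published on-demand x86 pricing; verify them
// against the current AWS pricing page before relying on the result.
const PRICE_PER_GB_SECOND = 0.0000166667 // assumed compute rate in USD
const PRICE_PER_REQUEST = 0.2 / 1e6 // assumed request rate in USD

function estimateMonthlyLambdaCost({ invocationsPerMonth, avgDurationSeconds, memoryMb }) {
  // Lambda bills compute in GB-seconds: duration multiplied by configured memory.
  const gbSeconds = invocationsPerMonth * avgDurationSeconds * (memoryMb / 1024)
  const computeCost = gbSeconds * PRICE_PER_GB_SECOND
  const requestCost = invocationsPerMonth * PRICE_PER_REQUEST
  return { gbSeconds, totalUsd: computeCost + requestCost }
}

// Hypothetical workload: one scheduled run per day, ~3 seconds each, 128 MB.
const estimate = estimateMonthlyLambdaCost({
  invocationsPerMonth: 30,
  avgDurationSeconds: 3,
  memoryMb: 128,
})
console.log(`GB-seconds per month: ${estimate.gbSeconds}`)
console.log(`Estimated cost (USD): ${estimate.totalUsd.toFixed(6)}`)
```

Under these assumptions the scheduled function costs well under a cent per month before any free tier is applied, which is why an always-on server is hard to justify for this workload.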

Infrastructure as Code with Terraform

Terraform made this project super easy. Deploying and destroying resources that needed to be rebuilt or modified was a breeze. No more clicking around in the AWS console. I also used Terraform to create IAM policies and security rules so that the form can only talk to the backend and database through the API Gateway.

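terraform.tfvars

This file is used to store the values of secret variables. variables.tf declares the variables, and Terraform automatically reads their values from terraform.tfvars when deploying, so the secrets don't have to live in your commit history as long as the file is listed in .gitignore. Think of it as a .env file.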

API Gateway

This will act as the entry point to the API. It will allow users to make POST requests to the service to save new subscribers and their zip code preferences to the database.
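As a minimal sketch of what the Lambda behind that POST endpoint might do first, the function below validates the request body before anything is written to the database. It assumes an API Gateway proxy integration (the body arrives as a JSON string in `event.body`), and the field names `email` and `zipCode` are hypothetical; the real form may use different ones.

```javascript
// Validate the body of a POST request delivered by an API Gateway proxy integration.
// ASSUMPTION: the field names `email` and `zipCode` are illustrative only.
function parseSubscription(event) {
  let body
  try {
    // `|| {}` also covers a literal JSON `null` body.
    body = JSON.parse(event.body || '{}') || {}
  } catch (err) {
    return { ok: false, statusCode: 400, error: 'Body must be valid JSON' }
  }

  const email = String(body.email || '').trim().toLowerCase()
  const zipCode = String(body.zipCode || '').trim()

  // Deliberately loose email check; actual delivery is the real test of validity.
  if (!/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email)) {
    return { ok: false, statusCode: 400, error: 'Invalid email address' }
  }
  // US ZIP codes are five digits.
  if (!/^\d{5}$/.test(zipCode)) {
    return { ok: false, statusCode: 400, error: 'ZIP code must be five digits' }
  }
  return { ok: true, subscriber: { email, zipCode } }
}

// Example with a made-up subscriber.
const result = parseSubscription({
  body: JSON.stringify({ email: ' Investor@Example.com ', zipCode: '29611' }),
})
console.log(result)
```

Rejecting bad input at the edge keeps malformed ZIP codes out of the table, so the scheduled report job does not spend RentCast requests on lookups that cannot succeed.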

Real Time Real Estate Market Data from RentCast

RentCast has an API service that allows you to programmatically fetch rental market trends. It uses a freemium pricing model, which is great for managing costs.

SES (Simple Email Service)

SES allows us to safely send email to users from the application. You get 3K free outbound emails per month; afterwards, it's only $0.10 for every 1K emails.
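For illustration, this is roughly the shape of the request an SES send needs. The sketch only builds the parameter object so it runs without the AWS SDK or credentials; the helper name `buildEmailParams` and the sample addresses are hypothetical.

```javascript
// Build the input for an SES SendEmail call.
// ASSUMPTION: `buildEmailParams` is an illustrative helper, not code from the project.
function buildEmailParams({ from, to, subject, textBody }) {
  return {
    Source: from, // must be an identity verified in SES
    Destination: { ToAddresses: [to] },
    Message: {
      Subject: { Data: subject, Charset: 'UTF-8' },
      Body: { Text: { Data: textBody, Charset: 'UTF-8' } },
    },
  }
}

// Example with made-up addresses.
const params = buildEmailParams({
  from: 'alerts@example.com',
  to: 'investor@example.com',
  subject: 'Rental market update for 29611',
  textBody: 'Your daily rental market summary goes here.',
})
console.log(JSON.stringify(params, null, 2))
```

Inside the Lambda, an object like this could be wrapped in `SendEmailCommand` from `@aws-sdk/client-ses` and sent with an `SESClient`. The sender would come from the `EMAIL_FROM` environment variable.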

EventBridge

EventBridge is responsible for making sure the scheduled Lambda function runs on time. You define a rule with a cron expression and point it at the function, and EventBridge invokes the function whenever the schedule fires.

CloudWatch

CloudWatch collects the logs and errors from the Lambda functions. Having them in one place makes it faster to track down and resolve issues.
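One way to make those logs easier to search is to write each entry as a single JSON object, since anything a Lambda prints with `console.log` or `console.error` ends up in CloudWatch Logs. This is a sketch of that idea, not the logging the project actually uses; the helper names and fields are hypothetical.

```javascript
// Emit one JSON object per log line so entries are easy to filter in CloudWatch Logs.
// ASSUMPTION: the helper names and field names are illustrative only.
function formatLog(level, message, fields = {}) {
  return JSON.stringify({ level, message, timestamp: new Date().toISOString(), ...fields })
}

function logError(message, err, fields = {}) {
  const line = formatLog('error', message, { ...fields, error: err.message })
  console.error(line)
  return line // returned so callers and tests can inspect what was logged
}

// Example: record which ZIP code a failed lookup belonged to.
const logged = logError('RentCast request failed', new Error('timeout'), { zipCode: '29611' })
```

With the ZIP code attached to every error, a spike in failures for one market is easy to tell apart from a general outage.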

Preparing the Lambda

In the Terraform configuration, along with the standard variables needed to deploy cloud resources, I also needed to ensure my Lambda had the necessary permissions to save new users to the database and to send emails using SES.

First, I needed to create an IAM role for the Lambda to assume.

```hcl
resource "aws_iam_role" "lambda_exec_role" {
  name = "lambda_exec_role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Action = "sts:AssumeRole"
      Effect = "Allow"
      Principal = {
        Service = "lambda.amazonaws.com"
      }
    }]
  })
}
```

Afterwards, a policy gets attached to the role, allowing the Lambda to write to the database and send emails.

When defining the Lambda function, I included environment variables for the table name, the sender email, and the RentCast API key. This keeps those values out of the source code and lets the Lambda function work out of the box as soon as the Terraform configuration is applied.

```hcl
resource "aws_lambda_function" "submit_form" {
  function_name = "submit_form_lambda"
  role          = aws_iam_role.lambda_exec_role.arn
  handler       = "index.handler"
  runtime       = "nodejs18.x"
  filename      = "${path.module}/lambda/submit_form.zip"

  environment {
    variables = {
      TABLE_NAME       = aws_dynamodb_table.subscribers.name
      EMAIL_FROM       = var.email_from
      RENTCAST_API_KEY = var.rentcast_api_key
    }
  }

  depends_on = [aws_iam_role_policy.lambda_custom_policy]
}
```
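On the Node.js side, those variables show up on `process.env`. A minimal sketch of reading them, assuming you want the function to fail fast when one is missing (the `loadConfig` helper is hypothetical, not part of the original code):

```javascript
// Read the environment variables set by the Terraform `environment` block and
// fail fast with a clear message if any are missing.
// ASSUMPTION: `loadConfig` is an illustrative helper; only the variable names
// come from the Terraform configuration.
const REQUIRED_VARS = ['TABLE_NAME', 'EMAIL_FROM', 'RENTCAST_API_KEY']

function loadConfig(env = process.env) {
  const missing = REQUIRED_VARS.filter((name) => !env[name])
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`)
  }
  return {
    tableName: env.TABLE_NAME,
    emailFrom: env.EMAIL_FROM,
    rentcastApiKey: env.RENTCAST_API_KEY,
  }
}

// Example with made-up values; in Lambda you would call loadConfig() with no arguments.
const config = loadConfig({
  TABLE_NAME: 'subscribers',
  EMAIL_FROM: 'alerts@example.com',
  RENTCAST_API_KEY: 'example-key',
})
console.log(config.tableName)
```

Passing `env` as a parameter keeps the function easy to test locally without touching the real process environment.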

Next, I wrote the code that uses the Axios npm library to fetch market statistics from RentCast. The function accepts a ZIP code as an argument and returns the stats from the response. It is called from the main handler function.

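```javascript
const axios = require('axios')

// API key injected through the Lambda environment variables defined in Terraform
const RENTCAST_API_KEY = process.env.RENTCAST_API_KEY

// Fetch market statistics for a ZIP code from RentCast
async function fetchMarketStats(zipCode) {
  try {
    const response = await axios.get('https://api.rentcast.io/v1/markets', {
      params: {
        zipCode,
        dataType: 'All',
        historyRange: 6,
      },
      headers: {
        accept: 'application/json',
        'X-Api-Key': RENTCAST_API_KEY,
      },
    })
    console.log('RentCast Market Stats Response:', JSON.stringify(response.data, null, 2))
    return response.data
  } catch (err) {
    console.error('Failed to fetch market statistics:', err.message)
    // Return an empty object so the caller can skip this ZIP code instead of crashing
    return {}
  }
}
```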
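Because `fetchMarketStats` returns an empty object on failure, whatever consumes it has to handle the "no data" case. The sketch below shows one way to turn a response into a short plain-text summary. The `rentalData` field names are assumptions about the response shape and should be checked against the actual RentCast payload; `buildReportText` is a hypothetical helper.

```javascript
// Turn a market stats response into a short plain-text summary, or return null
// when there is nothing to report (fetchMarketStats returns {} on failure).
// ASSUMPTION: `rentalData.averageRent`, `averageDaysOnMarket`, and `totalListings`
// are illustrative field names; confirm them against the real response.
function buildReportText(zipCode, stats) {
  const rental = stats && stats.rentalData
  if (!rental) return null

  const lines = [`Rental market update for ${zipCode}`]
  if (typeof rental.averageRent === 'number') {
    lines.push(`Average rent: $${Math.round(rental.averageRent)}`)
  }
  if (typeof rental.averageDaysOnMarket === 'number') {
    lines.push(`Average days on market: ${Math.round(rental.averageDaysOnMarket)}`)
  }
  if (typeof rental.totalListings === 'number') {
    lines.push(`Active listings: ${rental.totalListings}`)
  }
  return lines.join('\n')
}

// Example with made-up numbers.
const report = buildReportText('29611', {
  rentalData: { averageRent: 1449.6, averageDaysOnMarket: 40, totalListings: 87 },
})
console.log(report)
```

Returning `null` for an empty response lets the handler skip that subscriber's email for the day rather than sending a blank report.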

Creating a cron scheduler in EventBridge

EventBridge allows you to run Lambda functions on a schedule without having to write any scheduling code. I created the event rule, attached it to the Lambda function that sends the daily real estate market reports, and granted EventBridge permission to invoke that function.

```hcl
resource "aws_cloudwatch_event_rule" "daily_8am" {
  name                = "submit-reports-daily-8am"
  schedule_expression = "cron(0 8 * * ? *)" # 8 AM UTC daily
  description         = "Triggers the submit_reports_lambda every day at 8 AM UTC"
}

resource "aws_cloudwatch_event_target" "submit_reports_target" {
  rule      = aws_cloudwatch_event_rule.daily_8am.name
  target_id = "submit-reports-lambda"
  arn       = aws_lambda_function.submit_reports.arn
}

resource "aws_lambda_permission" "allow_eventbridge" {
  statement_id  = "AllowExecutionFromEventBridge"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.submit_reports.function_name
  principal     = "events.amazonaws.com"
  source_arn    = aws_cloudwatch_event_rule.daily_8am.arn
}
```
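The schedule expression is the part that tends to trip people up, because EventBridge cron expressions have six fields rather than the five used by Unix cron. The small parser below is only a reading aid for that format, written as a sketch; `parseEventBridgeCron` is a hypothetical helper and does not validate the individual field values.

```javascript
// Split an EventBridge cron expression into its six named fields.
// Field order: minutes, hours, day-of-month, month, day-of-week, year.
// ASSUMPTION: `parseEventBridgeCron` is an illustrative helper, not an AWS API.
function parseEventBridgeCron(expression) {
  const match = /^cron\((.+)\)$/.exec(expression.trim())
  if (!match) throw new Error('Expected an expression of the form cron(...)')

  const fields = match[1].trim().split(/\s+/)
  if (fields.length !== 6) throw new Error(`Expected 6 fields, got ${fields.length}`)

  const [minutes, hours, dayOfMonth, month, dayOfWeek, year] = fields
  // The two day fields cannot both be set; at least one must be "?".
  if (dayOfMonth !== '?' && dayOfWeek !== '?') {
    throw new Error('Either day-of-month or day-of-week must be "?"')
  }
  return { minutes, hours, dayOfMonth, month, dayOfWeek, year }
}

const schedule = parseEventBridgeCron('cron(0 8 * * ? *)')
console.log(schedule)
```

Read field by field, the rule above fires at minute 0 of hour 8 every day. The schedule is evaluated in UTC, so for a Greenville, SC audience that is 3 AM or 4 AM Eastern depending on daylight saving time.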

Final Thoughts

This project wasn't just a technical showcase — it was a demonstration of how to turn a real business pain point into a scalable, cost-effective cloud solution.

I architected this system to mirror the needs of a real estate analytics platform that delivers ZIP-code-specific rental insights to subscribers daily. By leveraging AWS Lambda, EventBridge, SES, S3, and Terraform, I built a fully serverless, low-maintenance, and production-ready system that can scale with demand — without scaling cost or complexity.

Here's what I delivered:

✅ Event-driven automation with reliable cron scheduling

✅ Secure, API Gateway-triggered Lambda functions

✅ Scalable, structured data storage with DynamoDB

✅ Infrastructure as Code using Terraform for repeatable, auditable deployments

✅ Strategic cost optimizations — like choosing S3 and Lambda over heavier compute options

If you're building a SaaS product, modernizing a legacy system, or just tired of overcomplicated infrastructure, I can help you design and deploy solutions that work.

This is how I approach every project: ownership, clarity, and business-aligned architecture.

📬 Connect with me:

Let's turn your next idea into a high-leverage system that scales.